Or, I fought the machine, and the machine (has, temporarily) won.

In casting about for a good project using the Arduino’s analog outputs, I started letting my mind wander to one of its favorite pseudo-Luddite dwelling places, the thought that there are now generations upon generations of technology that we have abandoned as obsolete, but that have some unique traits that might be worth resurrecting, or, less pompously, that might be fun to play with. I decided the thing I wanted to do was to find an old machine, open it up, tweak it with the Arduino and make new life in the old shell.

And almost immediately the idea of the cassette deck became lodged in my head – an iconic piece of cultural technology that iterated through dictation, to “home taping is killing music,” to the Walkman, then to nothingness, replaced at every stage by more efficient digital methods.

I started thinking about what I might do with a cassette deck. My mind wandered back to the awful racket of our Arduino sound experiment, with its square waves of pure tone, and I started to think that one of the nice things about cassettes was that though they were noisy (hiss, flutter, wow) and not always very faithful, theirs was a smelly, dirty, human sort of noise, not the coldly rational death-blare of unmodified PWM. And I thought, let me make an analog synthesizer out of a cassette deck.

I looked around for an old Walkman, but after visiting several thrift stores, junk shops and antique stores, I couldn’t for the life of me find one. And then I walked into a 99 cent store, and sitting up at the top of the display behind the cash register was a Coby microcassette recorder in its original, now very yellowed, blister pack. I bought it (to the store owner’s tremendous surprise) and took it home.

My plan was fairly simple – I would record a steady tone using an online tone generator and the onboard microphone, and then modify the pitch by changing the tape speed, with the Arduino supplying the power at whatever point in the circuit called for the least invasive surgery.
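The arithmetic behind that plan is simple: a tone on tape plays back at a pitch proportional to the tape speed, so doubling the speed raises the pitch an octave. Here is a minimal sketch of that relationship in Python. The function names and the 440 Hz example tone are assumptions for illustration, not anything measured on the Coby.

```python
import math


def playback_pitch(recorded_hz: float, speed_ratio: float) -> float:
    """Pitch heard when a recorded tone is played at speed_ratio times
    the speed it was recorded at (1.0 means the original speed)."""
    if recorded_hz <= 0 or speed_ratio <= 0:
        raise ValueError("frequency and speed ratio must be positive")
    return recorded_hz * speed_ratio


def semitone_shift(speed_ratio: float) -> float:
    """Musical interval, in equal-tempered semitones, produced by a
    change in tape speed. Positive is up, negative is down."""
    if speed_ratio <= 0:
        raise ValueError("speed ratio must be positive")
    return 12.0 * math.log2(speed_ratio)


if __name__ == "__main__":
    recorded = 440.0  # assumed example tone (concert A)
    for ratio in (0.5, 0.75, 1.0, 1.5, 2.0):
        hz = playback_pitch(recorded, ratio)
        shift = semitone_shift(ratio)
        print(f"speed x{ratio:<4} -> {hz:6.1f} Hz ({shift:+.2f} semitones)")
```

Because the interval depends on the ratio of speeds rather than the difference, equal steps in motor speed will not give equal musical steps.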

And then I ran into my first snag. I popped in some batteries, put in the microcassette, hit play and record, and spoke into the microphone – “testing, testing.” And when I played it back, I got garbled nonsense.

I checked the batteries – 1.61 volts each. And then I plugged in a universal AC adapter, and got the same results as before – inconsistent speed, occasionally stopping completely, then going again.

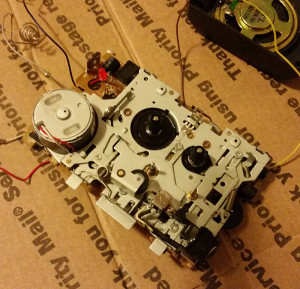

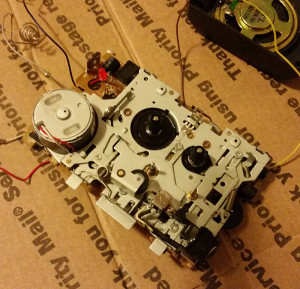

But at this point it was too late to get another tape player through eBay, and besides, I had even less hesitation now about busting the thing open to get at its guts. I unscrewed the five visible screws, used my wire cutters to shear away the pieces of the plastic housing that were fixed to the board, and pulled out the circuitry and mechanism.

I wondered if there was perhaps something funky between the batteries and the motor making the motor run oddly, so I found the motor’s leads and put 3V across them (the lowest voltage my power supply could deliver) – it sounded very smooth. I turned it over, and realized that though the motor was turning fine, the spindle was not, even though I had the “play” button (or what was left of it) engaged. I looked closer, and saw that the tiny drive belt running from the motor to the spindles was moving chaotically, and that seemed to be the problem. If I had to guess, I would say that whatever processes of heat and moisture, up by the ceiling of the 99 cent store, had yellowed the packaging had also degraded the plastic in the drive belt and/or the spindle’s gears to the point that the mechanism no longer worked. Or perhaps it was just a lemon to begin with.

But I also noticed that “rewind” worked fine. And by a lovely engineering quirk of the microcassette recorder genre, it was theoretically still possible to play back in rewind mode. I decided to go ahead with the project and just give it a shot.

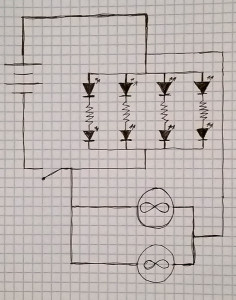

My plan then was to disconnect the motor from the rest of the circuit board and hand control of it over to the analog (PWM) outputs of the Arduino. At the same time, I would power the rest of the circuitry (sound output) using the digital outputs of the Arduino. I would change the tone by modifying the speed of the motor (first experimenting to see what output number corresponded with what pitch) and bring in rhythmic elements by turning the circuitry on and off. Of course this presupposed that I would be able to run it well enough to record some tone onto the tape, but that seemed a secondary concern.
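The "what output number corresponded with what pitch" step amounts to building a calibration table and interpolating within it. Below is a rough sketch in Python of how that lookup might work before anything gets committed to an Arduino sketch. The calibration numbers are placeholders, not measurements from this recorder, and the 0 to 255 range assumes the 8-bit values that `analogWrite()` accepts on a standard Arduino.

```python
from bisect import bisect_left

# HYPOTHETICAL calibration points: (PWM value 0-255, measured pitch in Hz).
# Real values would come from stepping the output and noting the pitch.
CALIBRATION = [(80, 220.0), (120, 330.0), (160, 440.0), (200, 550.0), (240, 660.0)]


def pwm_for_pitch(target_hz, calibration=CALIBRATION):
    """Return the PWM value expected to produce target_hz, using linear
    interpolation between the two nearest calibration points."""
    points = sorted(calibration, key=lambda p: p[1])  # order by pitch
    pitches = [hz for _, hz in points]
    if not pitches[0] <= target_hz <= pitches[-1]:
        raise ValueError("target pitch is outside the calibrated range")

    i = bisect_left(pitches, target_hz)
    if pitches[i] == target_hz:
        return points[i][0]  # exact calibration point, no interpolation

    (pwm_lo, hz_lo), (pwm_hi, hz_hi) = points[i - 1], points[i]
    fraction = (target_hz - hz_lo) / (hz_hi - hz_lo)
    return round(pwm_lo + fraction * (pwm_hi - pwm_lo))


if __name__ == "__main__":
    for hz in (220.0, 385.0, 440.0, 600.0):
        print(f"{hz:6.1f} Hz -> PWM {pwm_for_pitch(hz)}")
```

A measured table avoids assuming that motor speed rises in a straight line with the output value, which a small DC motor under load is unlikely to do. Rhythm would then be a separate matter of switching the audio circuitry's digital pin on and off.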

I got out my soldering iron, disconnected the motor, and soldered some non-tiny wires onto the ends of its leads. I then measured the resistance across the motor using a multimeter and soldered a fixed resistor of roughly the same value onto the board in the motor’s place, to stand in for whatever load the motor had put on the circuit. I soldered the speaker wires back to the internal speaker (they got disconnected in the great breaking-apart) and then ran 3V across the battery leads. A faint hum came through the speaker. I engaged the record head. The on-board LED came on!

Then I put the microcassette into its place and put my supply voltage across the motor. The motor turned. I pressed “play.” Nothing happened. The drive mechanism had completely failed.

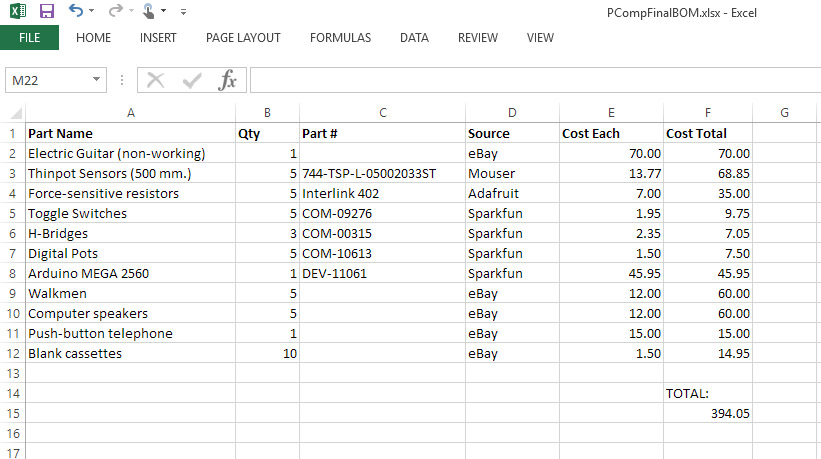

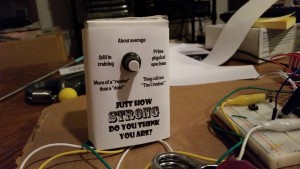

So I have essentially nothing to show for this project, though I think I am going to go ahead and order a couple of Walkmen off eBay, as they’re pretty inexpensive at this point, and I still think the idea is worth exploring. My hope is to begin by making a simple monotone synthesizer from one machine, and then try to gang together several to make a small “orchestra”. And of course, if I can get it to work correctly, the possibilities for both input (sensors, knobs, human interactions in general, as opposed to lines of code) and output (speech, ambient noise, instrument sounds as opposed to pure tone) are pretty exciting.

So watch this space!